How robots move

June 13, 2021

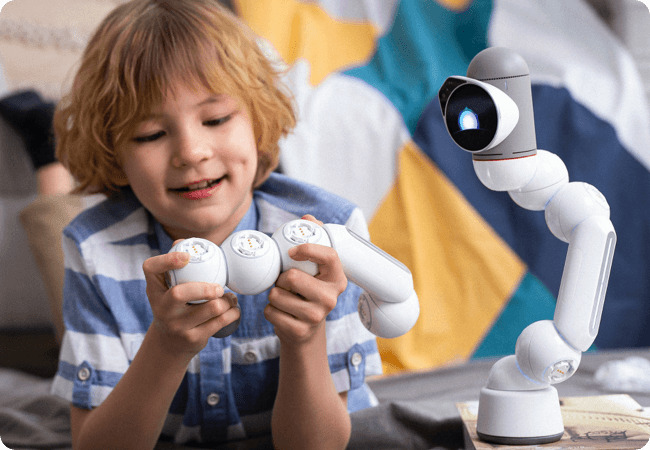


July 10, 2021

In this article, I will discuss how robots see, touching on two topics: computer vision and artificial intelligence. Robots are no longer blind: they watch what we do and learn from us.


Many cameras can already detect a human head and a smile, which means the camera understands where it is pointed and what it is capturing. So how does it work?

Unmanned cars are not yet widespread in Ukraine, but they can already maneuver easily in traffic and stop for pedestrians, and street CCTV cameras can detect and recognize people's faces. Robots can already do all of this too. These miracles are made possible by computer vision.

This article explains how human and computer vision work, how they differ, and how computer vision benefits people.

We humans don't give much thought to our eyesight, but when you build a robot, you have to take everything into account. To see, a robot needs cameras, sensors and detectors; a computer to process the picture; and artificial intelligence to analyze the data. Every level matters, and we will go through each one.

Level one: getting the picture

First, the robot needs to see something. Cameras, sensors and detectors help it do this. The more devices it has, the better it can navigate in space, but more devices also mean more calculations, and therefore a more powerful computer. If the robot moves, it is best to use lidar to measure the distance to objects, or at least a ToF camera. If it needs to recognize an object, it needs a camera. Often all of these devices work together.

Smart robots such as Promobot use an array of microphones to detect people. Eight microphones are placed around the robot, and when it hears a human voice, it works out where the sound is coming from and turns toward the speaker. Microphones also help when several people stand in front of the robot: the sound coming from a particular person tells the robot whom to talk to.

So, to see, a robot needs microphones too!
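To get a feel for how a microphone array can tell where a voice comes from, here is a minimal Python sketch of one common technique, time difference of arrival (TDOA), for a single pair of microphones. We don't know exactly what Promobot does internally; the two-microphone setup, the speed of sound (343 m/s), the 16 kHz sample rate and the function names below are assumptions for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C (assumed)


def estimate_delay_samples(sig_a, sig_b, max_lag):
    """Find the lag (in samples) that best aligns two microphone signals.

    Brute-force cross-correlation: a positive result means sig_b is a
    delayed copy of sig_a, i.e. the sound reached microphone A first.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = sum(
            sig_a[i] * sig_b[i + lag]
            for i in range(len(sig_a))
            if 0 <= i + lag < len(sig_b)
        )
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def direction_of_arrival(delay_s, mic_spacing_m):
    """Angle of the sound source in degrees (0 = straight ahead of the pair,
    positive = on microphone A's side).

    The extra distance travelled to the farther microphone is
    SPEED_OF_SOUND * delay_s; dividing it by the microphone spacing gives
    the sine of the angle (assuming the speaker is far away).
    """
    ratio = SPEED_OF_SOUND * delay_s / mic_spacing_m
    ratio = max(-1.0, min(1.0, ratio))  # keep asin in range despite noise
    return math.degrees(math.asin(ratio))


# Example: a 2-sample lag at 16 kHz with microphones 10 cm apart
lag = estimate_delay_samples([0, 0, 1, 0, 0, 0], [0, 0, 0, 0, 1, 0], max_lag=3)
angle = direction_of_arrival(lag / 16_000, mic_spacing_m=0.10)
print(lag, round(angle, 1))
```

A single pair cannot tell front from back, so with eight microphones around the robot the same calculation can be repeated for several pairs and the results combined.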

Lidar

Today, lidar is the most advanced and promising device for measuring the distance to objects, and it is increasingly used in robotics. When it first appeared, lidar cost a lot of money and was rarely used. Unmanned vehicles now use lidar everywhere: it fires a laser and determines the distance to objects from the reflected light. And yes, iPhones already have this technology too.

Its advantages include high measurement accuracy and good performance in both light and darkness, outdoors and indoors. Its disadvantages include poor detection of transparent and reflective surfaces.
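The distance calculation itself is simple arithmetic. The sketch below assumes a pulsed time-of-flight lidar, which measures how long a laser pulse takes to reach an object and come back; the function name is ours, not from any lidar SDK.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def pulse_distance(round_trip_time_s):
    """Distance to an object from a laser pulse's round-trip time.

    The light travels to the object and back, so the one-way distance
    is half of speed * time.
    """
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


# An echo arriving about 66.7 ns after the pulse means an object about 10 m away
print(round(pulse_distance(66.7e-9), 2))
# A timing error of just 1 ns shifts the result by about 15 cm
print(round(pulse_distance(1e-9), 3))
```

This is why lidar needs very fast timing electronics: centimetre accuracy means measuring time to within a fraction of a nanosecond.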

ToF camera

Another device for measuring distance is the ToF (time-of-flight) camera. The main difference from lidar is that instead of scanning with a laser beam, it illuminates the scene with infrared light and builds a depth image from the reflected light, measuring the distance at every pixel.

This camera can see objects at distances of 5 meters or more; it can also be used to build a map of a room and to recognize a person's face. Like a lidar laser, it emits light in a range invisible to the human eye.
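Many ToF cameras do not time a single pulse; instead they modulate their infrared light and measure, at every pixel, the phase shift of the reflected signal. Assuming such a continuous-wave ToF camera, depth follows from d = c·φ / (4π·f), where φ is the phase shift and f the modulation frequency. The 20 MHz frequency and the function names below are illustrative assumptions, not the spec of any particular camera.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def phase_to_distance(phase_rad, modulation_hz):
    """Depth (m) for one pixel of a continuous-wave ToF camera.

    The phase shift of the reflected light is proportional to the
    round-trip distance, hence 4*pi rather than 2*pi in the denominator.
    """
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * modulation_hz)


def max_unambiguous_range(modulation_hz):
    """Beyond this distance the phase wraps past 2*pi and depths repeat."""
    return SPEED_OF_LIGHT / (2.0 * modulation_hz)


def depth_image(phases, modulation_hz):
    """Convert a 2D grid of per-pixel phase shifts into a depth image (m)."""
    return [[phase_to_distance(p, modulation_hz) for p in row] for row in phases]


print(round(max_unambiguous_range(20e6), 2))  # about 7.5 m at 20 MHz
print(depth_image([[0.5, 1.0], [1.5, 2.0]], 20e6))
```

A lower modulation frequency extends the range but makes each depth reading less precise, which is one reason such cameras are typically rated for a few meters.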

Such cameras are only now entering our lives: 10 years ago a lidar cost about a hundred thousand dollars, and now they are being built into robots and wearable gadgets.

Robots use lidars and ToF cameras to build a map of the premises and memorize it, which lets them navigate and drive confidently without collisions. A powerful processor handles all this information, but that's a topic for another article.
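A simple way to turn range readings into a map is an occupancy grid: the floor is divided into square cells, and every cell a beam hits is marked as an obstacle. The sketch below assumes a single 2D scan taken from the centre of the grid; real mapping (SLAM) also tracks the robot's own position, marks the cells along each beam as free and combines many scans, all of which we leave out. The function name and parameters are hypothetical.

```python
import math


def scan_to_occupancy_grid(ranges, angle_min, angle_step, cell_size, grid_size):
    """Mark grid cells hit by lidar beams as occupied.

    The robot sits in the centre of a grid_size x grid_size grid; beam i
    points at angle_min + i * angle_step (radians) and returns ranges[i]
    metres. Returns a list of rows where 1 = obstacle, 0 = nothing seen.
    """
    grid = [[0] * grid_size for _ in range(grid_size)]
    centre = grid_size // 2
    for i, r in enumerate(ranges):
        if not math.isfinite(r):
            continue  # no echo: nothing hit within range
        angle = angle_min + i * angle_step
        # Convert the beam's polar reading to x, y in the robot's frame
        x = r * math.cos(angle)
        y = r * math.sin(angle)
        col = centre + math.floor(x / cell_size)
        row = centre + math.floor(y / cell_size)
        if 0 <= row < grid_size and 0 <= col < grid_size:
            grid[row][col] = 1
    return grid


# Three beams: straight ahead, to the left, and one with no echo
grid = scan_to_occupancy_grid([1.0, 1.0, float("inf")], angle_min=0.0,
                              angle_step=math.pi / 2, cell_size=0.5, grid_size=8)
for row in grid:
    print("".join("#" if cell else "." for cell in row))
```

Storing the map as a grid makes it easy for the robot to remember and to check quickly whether a planned path crosses an obstacle.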

But we must remember that besides the advantages there are disadvantages: lidars and ToF cameras still have low resolution. Even automotive lidars have only 64 to 128 scan lines. Manufacturers therefore have to choose what to scan, and some areas may fall outside the field of view. That's where cameras come in: they give the robot another way to see into these blind spots.
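To see why 64 or 128 lines can be limiting, we can estimate the vertical gap between neighbouring scan lines at a given distance. The 30° vertical field of view below is an assumed round number for illustration, not the spec of a specific lidar.

```python
import math


def gap_between_scan_lines(num_lines, vertical_fov_deg, distance_m):
    """Approximate vertical gap (m) between adjacent scan lines at distance_m,
    assuming the lines are spread evenly across the vertical field of view."""
    spacing_deg = vertical_fov_deg / (num_lines - 1)
    return distance_m * math.tan(math.radians(spacing_deg))


for lines in (64, 128):
    gap = gap_between_scan_lines(lines, vertical_fov_deg=30.0, distance_m=50.0)
    print(f"{lines} lines: about {gap * 100:.0f} cm between lines at 50 m")
```

Under these assumptions the gap at 50 m is roughly 42 cm for 64 lines and 21 cm for 128 lines, so a small object at that distance can slip between two lines entirely.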

Level two: processing

Once all the information from the sensors has been received, it needs to be processed. In humans, this happens imperceptibly: the brain does it without conscious thought. A robot, however, needs an explicit algorithm, and it has to work hard to "digest" all the data.

Lidar provides information in the form of a three-dimensional point cloud that can be easily processed.
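Each lidar return is essentially a distance plus the two angles of the beam, so turning a scan into a 3D point cloud is a matter of converting spherical coordinates to x, y, z. The axis convention and function names below are assumptions for this sketch.

```python
import math


def to_point_cloud(returns):
    """Convert lidar returns to 3D points.

    Each return is (range_m, azimuth_rad, elevation_rad). Output points are
    (x, y, z) with x forward, y to the left and z up.
    """
    cloud = []
    for r, azimuth, elevation in returns:
        ground = r * math.cos(elevation)  # length of the beam's projection on the floor
        cloud.append((ground * math.cos(azimuth),
                      ground * math.sin(azimuth),
                      r * math.sin(elevation)))
    return cloud


def nearest_obstacle(cloud):
    """Distance (m) from the sensor to the closest point in the cloud."""
    return min(math.dist((0.0, 0.0, 0.0), p) for p in cloud)


scan = [(2.0, 0.0, 0.0),
        (1.5, math.radians(90), 0.0),
        (3.0, math.radians(-45), math.radians(10))]
cloud = to_point_cloud(scan)
print(nearest_obstacle(cloud))
```

Once the data is in this form, simple geometric checks like the nearest-obstacle distance are cheap, which is part of why point clouds are convenient to process.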

Suppose the robot sees a person: it needs to determine how big the person is and where on the map they are. Sizes are hard for a robot to calculate; one approach is the "truncated pyramid" (frustum). The object found in the camera image is extended into 3D space as a truncated pyramid, and the lidar points that fall inside it are passed to a neural network, which estimates the object's position and dimensions. This brings us smoothly to the third level.
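The frustum idea can be sketched in a few lines, assuming the lidar points have already been transformed into the camera's coordinate frame and the camera's pinhole intrinsics (fx, fy, cx, cy) are known. A 2D box from a person detector selects the points inside the frustum; where a real system would feed those points to a neural network, this sketch substitutes a crude min/max estimate. All names are hypothetical.

```python
def points_in_frustum(cloud, box, fx, fy, cx, cy):
    """Keep 3D points whose pinhole projection lands inside a 2D box.

    Points are (x, y, z) in the camera frame: x right, y down, z forward (m).
    box = (u_min, v_min, u_max, v_max) in pixels. The box swept away from the
    camera forms a truncated pyramid (frustum) in 3D.
    """
    u_min, v_min, u_max, v_max = box
    inside = []
    for x, y, z in cloud:
        if z <= 0:
            continue  # behind the camera
        u = fx * x / z + cx  # pinhole projection to pixel coordinates
        v = fy * y / z + cy
        if u_min <= u <= u_max and v_min <= v <= v_max:
            inside.append((x, y, z))
    return inside


def rough_size_and_position(points):
    """Axis-aligned extent (m) and centre of the selected points.
    A neural network does this far more robustly, e.g. ignoring background."""
    xs, ys, zs = zip(*points)
    size = (max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))
    centre = (sum(xs) / len(xs), sum(ys) / len(ys), sum(zs) / len(zs))
    return size, centre


cloud = [(0.0, -0.8, 4.0), (0.0, 0.9, 4.0), (0.25, 0.0, 4.0),  # the person
         (3.0, 0.0, 4.0),                                      # a wall to the side
         (0.0, 0.0, -1.0)]                                     # behind the camera
selected = points_in_frustum(cloud, (280, 100, 400, 400), fx=500, fy=500, cx=320, cy=240)
size, centre = rough_size_and_position(selected)
print(len(selected), size, centre)
```

With this toy data the selected points span about 1.7 m vertically, a plausible height for a person about 4 m away.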

Level three: analysis

Good robots use neural networks to analyze the data in images. It would take thousands of articles like this one to describe them fully, but in short, a neural network is a large set of interconnected equations. You feed data into it, it analyzes the data and gives you an answer. For example, if you show a neural network many pictures with people's faces marked, it trains itself and learns to find faces.
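The smallest building block of such a network is a single artificial neuron: a weighted sum of its inputs passed through a squashing function. The Python sketch below trains one neuron with gradient descent on a tiny, entirely hypothetical dataset of two made-up features per picture. Real face detectors work on raw pixels with many layers, so treat this only as an illustration of what "training" means.

```python
import math
import random


def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, features):
    """One artificial neuron: a weighted sum of the inputs, then a sigmoid.
    The output can be read as 'probability that this is a face'."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def train(examples, epochs=500, learning_rate=0.5, seed=0):
    """Show the labelled examples over and over, nudging the weights after
    each one (gradient descent on the log-loss) so that the connections that
    lead to correct answers get stronger."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.1, 0.1) for _ in examples[0][0]]
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = predict(weights, bias, features) - label
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


# Hypothetical toy features: [looks_like_eyes, looks_like_wheels], label 1 = face
examples = [
    ([0.9, 0.1], 1),
    ([0.8, 0.2], 1),
    ([0.1, 0.9], 0),
    ([0.2, 0.7], 0),
]
weights, bias = train(examples)
print(predict(weights, bias, [0.85, 0.15]))  # high: looks like a face
print(predict(weights, bias, [0.15, 0.80]))  # low: does not
```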

The process has three steps. First, the neural network is shown faces. Second, it is shown a variety of pictures, not only faces; if it finds the faces without errors, the network is considered trained. Third, the network is reduced in size and optimized for speed. After that, the neural network can be put to work.
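The third step, shrinking the network, is often done by quantization: storing each weight as an 8-bit integer instead of a 32-bit float. We don't know which optimizations the robots in this article use; the sketch below shows simple symmetric int8 quantization as one possible approach, with hypothetical weights and function names.

```python
def quantize_int8(weights):
    """Symmetric int8 quantization: map floats to integers in [-127, 127].

    One shared scale factor is kept so the weights can be approximately
    restored; each weight then needs 1 byte instead of 4 (float32).
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Approximately recover the original float weights."""
    return [q * scale for q in quantized]


weights = [0.42, -1.27, 0.03, 0.98]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Each restored weight differs from the original by at most scale / 2
print(q)
print([round(r, 3) for r in restored])
```

Because rounding slightly changes the weights, it is worth re-running the check from the second step on the smaller network to make sure it still finds faces correctly.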

Scientists modeled this technology on our brains. When a person repeats something a certain number of times, they memorize it: through such repetition, neural connections are strengthened and understanding comes. Robots need to be taught all the objects around them so that they understand what they see and people can easily communicate and interact with them. Robots are getting smarter every year, and a robot with a neural network can keep getting smarter without human input.

Vision - it's in your mind.